Data Warehouse - Design and Implementation

Introduction and Scope

Data Warehouse 1.0 is Stage One

The soon-to-be-released Mifos Technology Plan will probably have as one of its main goals "Transform Mifos into a best-of-breed business intelligence system for microfinance". Part of this vision involves the development of a data warehouse that contains MFI data from the Mifos application as well as other sources. The scope of this 'stage one' data warehouse initiative (DW 1.0) is to provide the ability to tackle the traditionally 'gnarly' mifos reports.

Focus on High Cost Gnarly Reports

Gnarly reports aren't the 'show me a list of clients and their addresses' type of report. They are the operational and finance reports that show a raft of metrics, transaction totals and arrears aging information at MFI/Branch/Loan Officer/Center levels on current and historical date ranges.

Prior Mifos releases shipped with just a few reports... the Branch Progress report being typical of the gnarly type. As a result, a number of clients have developed their own gnarly reports. (Ryan Whitney, Sam Birney, Sungard are some of the people who have been at the coalface).

Requirements

The purpose of DW 1.0 is to support a number of Operations and Finance 'standard reports' - many very gnarly. Currently, there are 16 reports which are split as follows:

High Priority - 7

Medium Priority - 5

Low Priority -4

While a goal for DW 1.0 is to be able to provide a more generic reporting capability than these specific 16 reports (and allow whatever slicing and dicing can be imagined), the 16 contain typical mifos reporting patterns and concepts and are pretty much the 'business requirements' that have been used for this design and implementation.

The list and mock-ups of the 16 are in a spreadsheet. Rather than link to this spreadsheet (it does change), here's a link to the Loan Officer Detailed Report - Content (No. 1 priority). This gives a good idea of the types of complex reports that mifos and its clients have attempted to build from the mifos production schema over the years:

Reformat

DW 1.0 converts the 200+ mifos database into about 20 tables designed for easy reporting . Some of the reports mentioned above are not supported (partially or fully) by DW 1.0. They are reports that source data external to mifos or the 'show me a list of clients and their addresses' type reports. To get DW 1.0 to support each of these reports can be decided on a case-by-case basis at they are being developed.

Design Stuff

Design in a Nutshell

The main design is just in following the standard dimension modelling (star schema) approach. There are lots of onlines sources about dimensional modelling and star schemas. http://www.rkimball.com/ is a good one because Ralph Kimball is pretty much the industry guru in the area for many years.

- The only data changes that matter to the gnarly reports are hierarchy and status changes. Removing the complexity of identifying hierarchy and status changes for customer and accounts occurs during the ETL process and is reflected as a 'stack' of dim_personnel, dim_customer, dim_loan and dim_savings entries each having a 'valid_from' and a 'valid_to' date pair.

- I've considered branch, loan officer, center, group, and client to be one big hierarchy and collapsed it during the ETL onto all the relevant dim and fact (and hist) tables. So, metrics involving loans and loan officers don't require 'joins' up the hierarchy to get to loan officer, its pretty much already available.

- All the tables (except the generic dim_date one) currently have very few data 'columns'. They are mostly 'keys' and standard audit columns e.g. A central table like dim_customer only has customer_id, display_name, customer_level_id, customer_status and gender. The others have less. This is because... the gnarly reports don't need the data. This approach is not necessarily typical of dimension tables though. Of course, additional columns can be added but I took the approach that if its not needed don't add it yet to keep the database structure very small and easy to report from (not for performance reasons).

Relatively Easy and Consistent Querying

It's relatively easy to write the query for 'What clients were active at a certain period for a branch' e.g. something like:

select count(distinct(c.customer_id)) as number_of_active_clients

from dim_office o

join dim_customer c on c.branch_key = o.office_key

where o.display_name = 'my branch'

and c.customer_level_id = 1

and c.customer_status = 'ClientStatus-Active'

and c.valid_from <= '2010-02-01'

and c.valid_to > '2010-02-01'

This would be quite difficult on the production mifos schema as you'd have to consider the change_log table for customer status changes and the customer_hierarchy table for hierarchy changes (and then you'd worry about clients not in a hierarchy). Also the DW queries tend to have this type of pattern so writing them should result in less mistakes (also can use metadata to simplify).

Data Warehouse Database Design (Link)

Identifying Changed Data in Mifos

Mifos Audit Usage

The key 'changes' that are important for Data Warehouse 1.0 are related to offices, personnel (loan officers), customers (centers, groups and clients) and accounts (loan and savings). Specifically, it's important to pick up hierarchy and status changes. Customer hierarchy changes are the most varied and complex.

A revision of how auditing is done in mifos is on the technology plan. This would impact the data warehouse ETL but would hopefully be more accurate and easy to work with than the current implementation (some notes below).

The specific logic for identifying changes is in the individual ETL jobs in the business intelligence git repository (git://mifos.git.sourceforge.net / mifos/bi) but the key aspects are:-

- change_log/change_log_detail for changes to center/group/client status's as well as loan officer reassignments, branch membership changes and group membership removals

- customer_hierarchy for changes to center/group hierarchies

- account_status_change_history for changes to loan and savings account status's

There are a couple of small bugs associated with the mifos audit list under Issues Outstanding in Data Warehouse 1.0 Non-automated ETL Testing Summary but the main points are the next ones.

Mifos Audits - Possible Improvement Areas

- Customer status changes are localised

The change_log audit captures neither the status_id (preferable) or the non-localised status name. It audits a localised version. This is okay for showing changes to the user in their language but required an ETL step to convert back to non-localised values (only done for English at the moment). - Office hierarchy changes are not audited in mifos

This means that reporting can only take into account the current office hierarchy. If reporting based on historical hierarchy is important e.g. report on branch A under the regional office it was in last year not this year (haven't seen any reports needing it yet but it would be a common data warehouse function to do this) then we would need to do something about it.

a) could change mifos auditing to record these changes

b) could pick up these changes from data comparison (good 'from now on' but previous history is lost and would need to be manually input if required) - Office names are not ensured to be unique in mifos.

The major problem with this is that branch membership change logs show the old and new branch name. So a look up on name is needed to get the branch id. If the name is a duplicate (or if the name has changed as mifos doesn't record name changes to offices) you can't get to a specific id. - Personnel name (and name changes) are not ensured to be unique in mifos

This can affect the loading of the history of customers and their loan officer reassignments. change_log only saves the name of the loan officer. To pick up the personnel_id, the ETL has to match by name. Name changes are audited (entity_type = 17, field_name = 'name') but the 'union' of personnel names (and their history of changes) is not guaranteed to be unique.

The etl job picks on on duplicates when loading a staging table and stops execution so that the data can be fixed.

The best fix is for the mifos audit to have the personnel_id on it for loan officer assignment. Second best and not very neat is to ensure uniqueness of personnel name (and name changes). Third best is to alter any data that has caused a problem as it arises. Manually altering is messy tho as it requires updates to loan officer assignment entries. - Can't determine the order of customer hierarchy and status changes

Auditing on customer_hierarchy and change_log is at a 'day' level. Not a problem by itself. You can determine the order of customer_hierarchy table changes and you can determine the order of customer status changes (on change_log) because of their auto generated id field. However, you can't figure out the combination of both. The upshot of this is that if there are hierarchy and status changes on the same day for a customer then the order in which they happened can't be determined. The order in which the ETL processes changes is New, Hierarchy, Status so it is possible that the following occurs:

A client is created, made active, then moved to another group all on the same day. It will show as 'new client', 'moved to new group', 'made active'.

The main point being a repayment made on that day will link to the new group although it technically is associated with the previous group. Not the end of the world tho. - account_status_change_history entries based on system date rather than transaction date

e.g. if you use collection sheet to disburse a loan, the loan is made active on the date of entry rather than the 'meeting date' which is the transaction date.

If you were to run a query to see which loans were active on 1/1/2009 you may miss a few that were active but not designated as active in mifos. Again, not the end of the world.

Data Quality Related

Its possible that there are some data quality issues with MFI's that have been using mifos over a number of releases due to such things as bugs and migration decisions. Because of this... it's probable that the first time the Data Warehouse ETL is run there will be some data qualities issues to deal with... so allow some time for this.

In one MFI test done, a small % of transactions (savings, repayments, disbursals) occured before the mifos client creation date. Some of these may be valid (e.g. redo loan transactions).

ETL

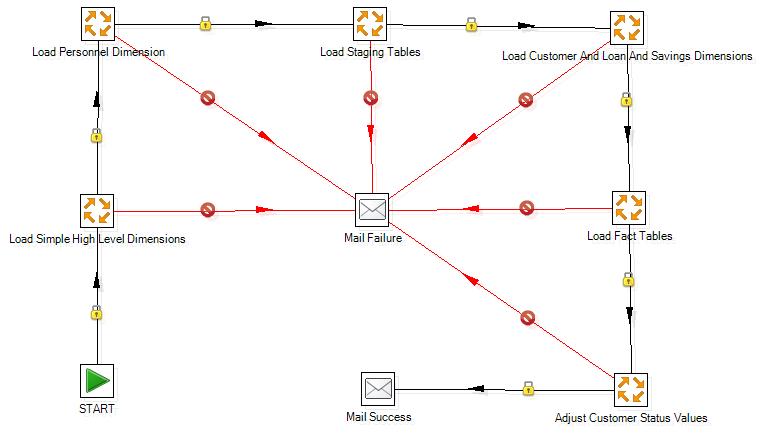

Top Level Pentaho Data Integration Job to Create Initial Data Warehouse